How I Built a Production App with Claude Code

The follow-up everyone asked for...

After I published “I Went All-In on AI. The MIT Study Is Right.”, my inbox exploded. That article came from three months of forcing myself to use only Claude Code, an experiment that nearly cost me my confidence as a developer.

But you wanted more. “HOW did you actually do it?” “What was your process?” “What worked and what broke?”

Fair questions. So here’s the detailed breakdown of those 60+ hours, including the livestreams where you can watch me argue with Claude about why disabling tests isn’t a viable solution.

This Article Has an Expiration Date

Let’s get something out of the way: by the time you read this, Claude Code will have shipped five new features that “solve” everything I’m about to describe. Someone will comment, “You should have used Claude’s new context-perfect-memory-agent-system!” or whatever they release next week.

That’s fine. Actually, it’s the point.

I’m not writing this to give you the ultimate Claude Code playbook that will work forever. The pace of AI tool evolution makes that impossible. What worked last month is outdated today. What I’m documenting here worked (until it didn’t) in September 2025.

What I’m giving you is something more valuable: a look under the hood at what actually happens when you push these tools to their limits. The features will change. The token limits will grow. The agent systems will get fancier.

But the fundamental challenges? The context complexity? The non-deterministic behavior? The architectural drift? Those aren’t going away with a feature update. They’re baked into how these systems work.

So when you read this in six months and Claude Code has quantum-context-management or whatever, remember: I’m showing you the patterns, not the tools. The tools will evolve. The problems will remain remarkably consistent.

Now, let’s talk about what actually happened.

The Pattern We All Notice

Week one was magic. I was flying. Features that would have taken me hours took minutes. Claude Code was cranking out components, APIs, database schemas - everything just worked.

Week three? Different story. Small changes started taking longer than writing from scratch. Claude would fix one thing and break two others. My prompts grew from paragraphs to essays just to maintain context.

By week six, I was spending more time managing Claude than coding. Something was fundamentally broken, but I couldn’t put my finger on it.

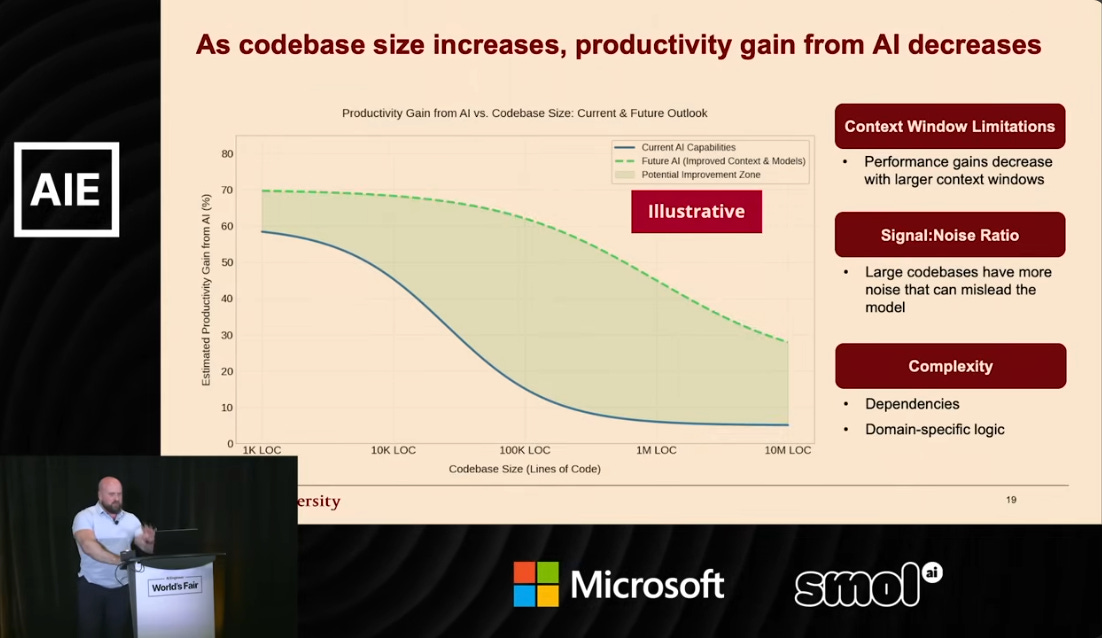

Then Stanford researchers released their findings. After studying 100,000+ developers across 600 companies, they discovered what I was living: AI productivity gains tank as your codebase grows. At 10,000 lines of code? You’re flying with 60% productivity gains. At 100,000 lines (where Roadtrip Ninja sits now)? Those gains cratered.

The chart explained everything. It wasn’t that Claude was getting worse - it was that context complexity compounds as the codebase grows, and neither Claude nor I were prepared for it.

This mirrors exactly what happens with human teams. The difference? I’ve spent 25 years learning to manage that complexity. Claude hasn’t.

Six Realities I Had to Accept

1. LLMs Are Just Like Your Dev Team

Give Claude the same prompt twice, and you get different outputs. Sound familiar? That’s your senior developer on Monday versus Friday. The difference is that your senior dev has learned to manage that variability through experience. Claude gives you raw unpredictability at light speed.

2. Requirements Are Still The Biggest Challenge

After 50 years in this industry, we still can’t get requirements right every time. AI doesn’t fix this - it amplifies it. When commenters said “just tell Claude to make the changes,” they missed the point entirely. If we still struggle to write clear requirements for humans, we can’t expect AI to intuit the missing pieces of information it needs to succeed.

Yes, I am aware of “Spec Driven Development”. I can hear people screaming its name at me, and some of you actually did in the comments. However, spec-driven development isn’t anything new. It’s just a fancy name for a well-defined, well-maintained product backlog. Anyone who has worked with me KNOWS I am gonna have a healthy backlog at all times. 💪

3. Your Tests Will Fail (Because Everyone’s Tests Suck)

“Just do TDD!” Boy oh boy, did I hear that one from A LOT of commenters. Let’s take a step back. How many companies actually nail TDD? Maybe 5%, on a good day?

Claude is trained on real-world code. Real-world code has crappy tests. Result? Claude regularly told me “MOST of the tests are passing” or simply disabled the failing ones. I spent hours with my sleeves rolled all the way up to my shoulders, untangling test suites that looked exactly like what most companies ship.

4. Context Windows Are Marketing BS

Claude can handle 200k tokens? Great. Can it use them effectively? Hell no.

I tried everything - agents, compartmentalization, careful context management. The reality? I was massively better at managing context than Claude. And I’m just one guy with a brain, not a billion-dollar AI model.

5. Human Team Patterns Work (Because They Have To)

Every pattern I’ve taught dozens of organizations about building engineering teams over 25 years? Essential for AI. Actually, more essential. Your human developers can read between the lines, connect dots, and ask clarifying questions. Claude does exactly what you type. Nothing more, nothing less.

6. Document or Die

I documented everything in GitHub issues. Not for posterity. For survival. Without those issues serving as context containers, I’d have been completely lost by week two.

Again, what works for humans works for AI.

The System That Delayed the Inevitable

Let me be clear: this system helped. It pushed back the productivity cliff. But at 100,000 lines of code, the ROI on my time still fell off that cliff. That’s why I wrote the original article - because even with the best practices, the best documentation, the best agent setup, I still hit the wall.

But here’s what bought me time:

Three Agents, Clear Roles

When Claude Code launched Agents mid-experiment, I built this structure:

Product Owner Agent: Researches requirements, writes acceptance criteria, documents everything

Architect Agent: Defines approach based on our standards, adds technical specs to issues

Engineer Agent: Takes the combined context and builds

This worked... until about 70,000 lines. Then, even the agents started losing context between handoffs.
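The handoff between the three roles can be made explicit by treating the issue as the single record every agent reads and writes. This is a sketch under stated assumptions: the `Issue` fields and the functions `missing_context` and `engineer_prompt` are hypothetical names, and the real agents are Claude Code agents, not Python functions. The point it illustrates is refusing to start the build step when an earlier role left nothing behind.

```python
from dataclasses import dataclass, field


@dataclass
class Issue:
    """Hypothetical stand-in for a GitHub issue used as a context container."""
    title: str
    acceptance_criteria: list[str] = field(default_factory=list)  # Product Owner
    technical_spec: list[str] = field(default_factory=list)       # Architect


def missing_context(issue: Issue) -> list[str]:
    """List the handoff sections that are still empty."""
    missing = []
    if not issue.acceptance_criteria:
        missing.append("acceptance_criteria (Product Owner)")
    if not issue.technical_spec:
        missing.append("technical_spec (Architect)")
    return missing


def engineer_prompt(issue: Issue) -> str:
    """Build the Engineer's prompt, or fail loudly if a handoff was skipped."""
    gaps = missing_context(issue)
    if gaps:
        raise ValueError("handoff incomplete: " + ", ".join(gaps))
    lines = [f"Issue: {issue.title}", "Acceptance criteria:"]
    lines += [f"- {item}" for item in issue.acceptance_criteria]
    lines.append("Technical spec:")
    lines += [f"- {item}" for item in issue.technical_spec]
    return "\n".join(lines)
```

A check like this only guarantees that the context exists at the handoff. It does nothing about an agent that receives the context and ignores it.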

Eight Files That Saved My Sanity

API-AUTH.md - How we handle authentication

ARCHITECTURE.md - System design decisions

CLAUDE.md - The master file, referenced in EVERY prompt

DEVELOPMENT.md - Coding standards

FRONTEND.md - UI patterns

GIT-WORKFLOW.md - How we ship code

TECH-STACK.md - What we use and why

TESTING.md - How we verify things work

Here’s the maddening part: I referenced CLAUDE.md in EVERY. SINGLE. PROMPT. Didn’t matter.

Claude would acknowledge the standards, quote them back to me, then completely ignore them in the implementation. Same prompt, same context, same documentation - different architectural decision each time. That non-deterministic behavior meant I couldn’t trust anything without reviewing every line.

Early on, maybe 90% of the time, Claude followed the standards. By 70,000 lines? Flip a coin. Claude would randomly decide to implement authentication differently, switch database patterns mid-feature, or restructure the entire frontend component hierarchy because it “seemed better.”

The documentation helped, but only if I caught Claude in the act. Miss one freelance decision and it would compound - future agents would see the deviation and think it was the new pattern.
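Since quoting the standards did not guarantee following them, some rules can be checked by a script instead of by a prompt. The sketch below is illustrative only: the two rules, the `src/` paths, and the function name `find_drift` are invented placeholders, not the contents of the actual standards files. It shows the shape of a check that flags a deviation before a later agent mistakes it for the new pattern.

```python
import re
from fnmatch import fnmatchcase

# Hypothetical rules: (path glob, forbidden regex, message).
# Replace these with rules derived from your own standards documents.
RULES = [
    ("src/components/*", r"\bfetch\(",
     "components should call the API client, not fetch directly"),
    ("src/*", r"localStorage\.setItem\(\s*['\"]token",
     "auth tokens should not be written to localStorage"),
]


def find_drift(files: dict[str, str]) -> list[tuple[str, int, str]]:
    """Return (path, line number, message) for every rule violation.

    `files` maps a repository-relative path to that file's text.
    """
    findings = []
    for path, text in files.items():
        for pattern, forbidden, message in RULES:
            if not fnmatchcase(path, pattern):
                continue
            for lineno, line in enumerate(text.splitlines(), start=1):
                if re.search(forbidden, line):
                    findings.append((path, lineno, message))
    return findings
```

Regex rules like these only catch deviations that show up as text patterns. Structural drift, such as a reorganized component hierarchy, still needs a human reviewer.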

The Workflow That Eventually Broke

Keep Work Items Small: Using our agents, I broke each feature down into multiple work items, and I asked Claude to deliver only one piece of each work item at a time. First, the requirements. Then, the architectural approach. Finally, the implementation.

Test Everything Manually: This became my full-time job. Skip it and you get “stick drift” - your app slowly veers off course. By 100k lines, I was testing more than coding. Every session started with “What did Claude break while trying to fix that other thing?”

Quadruple Code Reviews:

Claude Code reviews the PR

GitHub Copilot reviews it

Code Rabbit reviews it

I review it

They each catch different things. Just like human reviewers would.

When Reviews Come In: I asked Claude to read ALL the comments and build a response plan. What are we fixing now? What becomes a GitHub issue for later? That issue preserves the context for when you revisit it.
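The response plan boils down to a partition: comments to fix now, and comments that become issues carrying their own context. Here is a minimal sketch, assuming each comment has already been judged blocking or not. The `ReviewComment` fields and the `blocking` flag are hypothetical, and the PR reference is passed in by the caller rather than looked up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewComment:
    """Hypothetical shape for one review comment, whoever wrote it."""
    reviewer: str   # e.g. an AI reviewer or a human
    path: str       # file the comment refers to
    body: str       # the comment text
    blocking: bool  # assumed to be decided during planning


def triage(comments: list[ReviewComment]):
    """Split comments into (fix_now, later) while preserving order."""
    fix_now = [c for c in comments if c.blocking]
    later = [c for c in comments if not c.blocking]
    return fix_now, later


def issue_body(comment: ReviewComment, pr_ref: str) -> str:
    """Render a deferred comment as issue text that keeps its context."""
    return (
        f"Deferred from review of {pr_ref}\n"
        f"Reviewer: {comment.reviewer}\n"
        f"File: {comment.path}\n\n"
        f"{comment.body}"
    )
```

Keeping the reviewer, file, and original wording in the issue body is what lets a later session pick the item up without the PR conversation in its context.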

The Breaking Point

Here’s what the evangelists won’t tell you: every mitigation strategy has a ceiling.

My eight documentation files? Claude would quote them while doing the opposite. My three-agent system? They began contradicting each other and themselves. My small work items? Even one-point stories became multi-hour battles.

At 100,000 lines, I was no longer using AI to code. I was managing an AI that was pretending to code while I did the actual work. Every prompt was a gamble. Would Claude follow the architecture? Would it remember our authentication pattern? Would it keep the same component structure we’ve used for 100 other features?

Roll the dice.

That’s when I wrote the original article. Because I realized I’d become part of the 95% failure rate the MIT study describes - not through lack of trying, not through poor practices, but because the fundamental model breaks down at scale. The non-deterministic nature that seems quirky at 1,000 lines becomes a nightmare at 100,000.

My Verdict (November 2025 Edition)

Here’s where I’ve landed after 100,000+ lines of code and more hours arguing with Claude than I care to admit.

AI tools like Claude Code aren’t a silver bullet. Shocking, right? There’s still no easy button. Building great products is hard. Always has been. Always will be. Anyone selling you the “build a unicorn in a weekend” dream is full of it.

Here’s where AI crushes EVERY TIME:

I can stand up a clickable prototype in minutes. Not hours. Minutes. These aren’t garbage mockups either - they’re functional enough that clients can actually feel the product. We’re validating product-market fit at breakneck pace. Ideas that would have taken weeks to test now take an afternoon. That’s not hype. That’s my Tuesday.

Here’s how I code with AI today:

AI is my sidekick. Robin to my Batman. It’s there when I need to bounce ideas, debug a gnarly problem, or generate boilerplate I’m too lazy to type. But I’m still Batman. I make the architectural decisions. I own the codebase. I understand what’s been built.

For those who don’t speak comic book: Robin eventually becomes Nightwing. Dick Grayson grows beyond being Batman’s sidekick, develops his own skills, leads his own team, and becomes a hero in his own right. No longer needs Batman to function. That’s the trajectory people assume AI is on.

Maybe Claude Code becomes Nightwing someday. Maybe it graduates from sidekick to independent operator. But we’re not there. Not even close. Right now, Claude is still Robin, occasionally trying to fight crime solo and getting his ass kicked.

This is my approach today. Will it fundamentally change? When it does - and it will - you’ll get another post dissecting what shifted and why. But the core principle won’t change: I’m the engineer. AI is the tool. The moment that flips is the moment I become redundant.

Until then, I’ll keep using AI for what it’s phenomenal at:

Rapid prototyping that actually works

Boilerplate generation that saves hours

Debugging assistance when I’m stuck

Testing edge cases I wouldn’t think of

Documentation nobody wants to write

And I’ll keep doing what only I can do:

Own the architecture

Make the hard decisions

Maintain the context

Understand the why

Take responsibility when it breaks

That’s not sexy. That’s not revolutionary. But it’s real, it works, and it’s sustainable beyond the honeymoon phase.

Your Move

Stop expecting magic. Start engineering.

Want production-grade enterprise software built with AI? You need:

Clear, explicit requirements (harder than you think)

Small, focused work items (just like with humans)

Manual testing (yes, with your actual hands)

Multiple review perspectives (AI reviewers catch different things)

Obsessive context management (this is your new full-time job)

Documentation that serves as guardrails (not optional)

After 60+ hours and 100,000 lines of code, Roadtrip Ninja is live. Could I have built it faster myself? It’s a toss-up. But I’ve learned something more valuable: how to make AI development work when the honeymoon ends.

The tools are powerful. The productivity gains are real (even when they decline). But the human-powered engineering? That’s eternal.

Stay courageous,

Josh Anderson

The Leadership Lighthouse

I should have read this 3 months ago 😂 You are spot on. Same experience with a lying, cheating, lazy assistant. Intelligent, eh, as a stone. Lessons learned: be ruthlessly strict, break up the code into modules of at most 30k lines with clear relations to the other parts, and it actually nears bliss. But never sleep. It will deceive and betray you at any minute.

Thanks for the great pair of articles! I suspect that human developer efficiency decreases as the codebase grows, even without AI. I couldn't find any related studies as detailed as the Stanford AI study you referenced. But I've seen this correlation with the large number of applications I've worked on over the years. One of my takeaways is that keeping codebases smaller and more focused is better. Whether your teams are human or not.